
Enhancing Human-AI Collaboration: Insights from Aaron Roth

On October 23, 2025, the Department of Computer Science at Johns Hopkins University’s Whiting School of Engineering hosted a talk by Aaron Roth, a professor of computer and cognitive science at the University of Pennsylvania. The lecture, titled “Agreement and Alignment for Human-AI Collaboration,” explored how artificial intelligence can be integrated into high-stakes decision-making, particularly in healthcare.

Roth’s presentation drew on three papers: “Tractable Agreement Protocols,” presented at the 2025 ACM Symposium on Theory of Computing (STOC); “Collaborative Prediction: Tractable Information Aggregation via Agreement”; and “Emergent Alignment from Competition.” As AI is adopted across more sectors, Roth argued, there is a pressing need for frameworks that let humans and AI systems work together effectively.

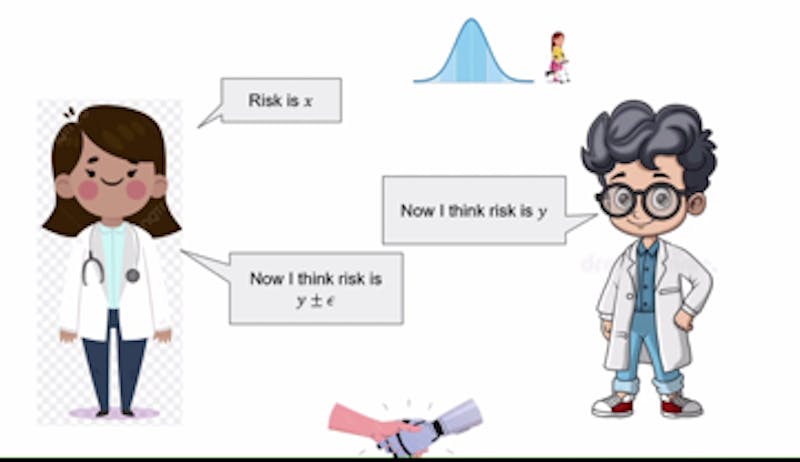

In one example, Roth described an AI assisting physicians with diagnosis. The AI analyzes factors such as a patient’s past diagnoses and symptoms to make a prediction. The physician then evaluates that prediction using their own expertise and clinical observations. If the AI’s suggestion and the doctor’s assessment disagree, Roth proposed a structured dialogue in which the two parties take turns sharing and updating their estimates. The exchange continues until they reach agreement, on the premise that each party holds information the other lacks, so every round of the exchange brings new information into play.

The idealized version of this dialogue rests on what Roth called a common prior: the AI and the human start from the same baseline beliefs about the case, even though each then observes different evidence. Under this assumption, the interaction is modeled as one of perfect Bayesian rationality, in which each participant knows what kind of information the other has access to, but not its specific content.
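The idealized setting can be made concrete with a toy model. The sketch below is a hypothetical illustration, not code from Roth’s papers; it mirrors the classic model behind Aumann’s agreement theorem. It assumes a small finite set of possible patient states, a prior shared by both parties, and that each party’s private evidence is described by a partition of those states (the party knows which block the true state is in, and both know each other’s partitions). The parties take turns announcing their probability that the patient has a condition (`event`), and after each announcement both rule out any state in which the speaker would have announced a different number. All names and numbers are invented for illustration.

```python
from fractions import Fraction


def posterior(prior, event, info):
    """P(event | info) under the shared prior; `info` is a set of states."""
    total = sum(prior[w] for w in info)
    return sum(prior[w] for w in info if w in event) / total


def cell(partition, state):
    """The block of a party's information partition that contains `state`."""
    return next(block for block in partition if state in block)


def agreement_dialogue(prior, event, partitions, true_state, max_rounds=50):
    """Parties take turns announcing P(event | what they know). After each
    announcement, everyone discards states in which the speaker would have
    announced a different number. Stops once all current beliefs coincide."""
    public = set(prior)  # states consistent with every announcement so far
    history = []
    for t in range(max_rounds):
        speaker = partitions[t % len(partitions)]
        q = posterior(prior, event, cell(speaker, true_state) & public)
        history.append(q)
        # The comprehension reads the old `public` before it is reassigned.
        public = {w for w in public
                  if posterior(prior, event, cell(speaker, w) & public) == q}
        beliefs = [posterior(prior, event, cell(p, true_state) & public)
                   for p in partitions]
        if all(b == beliefs[0] for b in beliefs):
            return beliefs[0], history
    raise RuntimeError("no agreement within max_rounds")


# Hypothetical example: four equally likely patient states; the condition
# is present in states 1 and 4; the true state is 1.
prior = {w: Fraction(1, 4) for w in (1, 2, 3, 4)}
event = {1, 4}
ai = [{1, 2}, {3, 4}]         # what the AI's data can distinguish
doctor = [{1, 2, 3}, {4}]     # what the doctor's examination can distinguish

belief, history = agreement_dialogue(prior, event, [ai, doctor], true_state=1)
print(belief, [str(q) for q in history])  # prints: 1/2 ['1/2', '1/3', '1/2']
```

Here the AI first says 1/2 and the doctor says 1/3. The doctor’s announcement rules out state 4, the AI’s next announcement rules out state 3, and both parties end at 1/2. Exact fractions are used so that the equality checks are not disturbed by floating-point rounding.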

Roth acknowledged, however, that a common prior is hard to achieve in practice, especially in complex settings with high-dimensional data such as hospital diagnostic codes. To get around this, he turned to calibration, which he likened to testing whether a weather forecaster is accurate: a forecaster is calibrated if, on the days it predicts a 70% chance of rain, it rains roughly 70% of the time. “You can sort of design tests such that they would pass those tests if they were forecasting true probabilities,” Roth noted. Calibration offers a more practical basis for the interaction, with the AI’s predictions adapting to the physician’s feedback.
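The forecaster test can be sketched as a standard binned calibration check. This is a generic technique, not code from Roth’s work: forecasts are grouped into probability bins, and within each bin the average forecast is compared with how often the event actually happened. The bin count and the tolerance `tol` are arbitrary illustrative choices, and a real statistical test would also need enough samples per bin.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=10):
    """Bin forecasts by value; for each non-empty bin return
    (average forecast, observed frequency of the event, count)."""
    bins = defaultdict(list)
    for p, y in zip(forecasts, outcomes):
        b = min(int(p * n_bins), n_bins - 1)  # a forecast of 1.0 joins the top bin
        bins[b].append((p, y))
    table = []
    for b in sorted(bins):
        pairs = bins[b]
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        frequency = sum(y for _, y in pairs) / len(pairs)
        table.append((mean_forecast, frequency, len(pairs)))
    return table


def is_calibrated(forecasts, outcomes, n_bins=10, tol=0.05):
    """Pass if, in every bin, forecast and outcome frequency differ by at most `tol`."""
    return all(abs(m - f) <= tol
               for m, f, _ in calibration_table(forecasts, outcomes, n_bins))


# Hypothetical rain record: 1 = it rained, 0 = it did not.
outcomes = [1] * 7 + [0] * 3 + [1] * 2 + [0] * 8
honest = [0.7] * 10 + [0.2] * 10   # says 70% on the first ten days, 20% after
overconfident = [0.7] * 20         # says 70% every day

print(is_calibrated(honest, outcomes))         # True
print(is_calibrated(overconfident, outcomes))  # False
```

The overconfident forecaster fails because it rained on only 9 of the 20 days it announced 70%. Note that passing this test does not require knowing the true probabilities, which is what makes calibration usable where a common prior is not.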

For instance, if an AI estimates a 40% risk for a treatment and the doctor puts it at 35%, the AI would adjust its next prediction to a value between the two. Repeating this exchange is meant to bring the two parties to agreement in a small number of rounds.
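The 40%/35% example can be illustrated with a deliberately simplified toy. This is not the protocol from the papers, which the article does not spell out: here each party simply replaces its estimate with a weighted average of its own estimate and the other party’s latest one, so every update lands between the two values, as described above. The function name `toy_agreement`, the fixed `weight`, and the agreement threshold `eps` are all hypothetical choices.

```python
def toy_agreement(ai, doctor, weight=0.5, eps=0.01, max_updates=100):
    """Alternate updates until the two estimates are within `eps`.
    Each update moves one party to a point between the two current estimates.
    Returns (ai_estimate, doctor_estimate, number_of_updates)."""
    if not 0 < weight < 1:
        raise ValueError("weight must be strictly between 0 and 1")
    updates = 0
    while abs(ai - doctor) >= eps:
        if updates == max_updates:
            raise RuntimeError("no agreement within max_updates")
        if updates % 2 == 0:   # AI moves toward the doctor's latest estimate
            ai = weight * ai + (1 - weight) * doctor
        else:                  # doctor moves toward the AI's latest estimate
            doctor = weight * doctor + (1 - weight) * ai
        updates += 1
    return ai, doctor, updates


ai_final, doctor_final, updates = toy_agreement(0.40, 0.35)
print(f"AI {ai_final:.4f}, doctor {doctor_final:.4f}, after {updates} updates")
```

Because each update shrinks the gap by the factor `weight`, the 5-point initial gap falls below 1 point after three updates, and in general the number of rounds grows only logarithmically in 1/`eps`.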

Roth also addressed cases where the AI’s objectives might diverge from the doctor’s, for instance if the AI is developed by a pharmaceutical company with its own commercial interests. In such cases, he suggested that physicians consult multiple large language models (LLMs). Each LLM would present its recommendation, and the doctor would choose the most suitable treatment, putting the AI providers in competition with one another. Roth argued that this competition would likely improve alignment and reduce the influence of any single provider’s bias.
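A toy model shows why choosing among several providers can help. This is a hypothetical sketch, not the model in “Emergent Alignment from Competition”: each provider recommends whatever maximizes patient benefit plus a bonus for the treatment it is biased toward, and the doctor picks the recommendation they judge best. It assumes the doctor can evaluate a recommendation once it is presented and that the providers’ biases point in different directions; the treatment names, scores, and bonuses are invented.

```python
def provider_recommendation(benefit, sponsored, bonus):
    """A hypothetical biased provider: recommends whichever treatment maximizes
    patient benefit plus an extra `bonus` for its own `sponsored` treatment."""
    return max(benefit, key=lambda t: benefit[t] + (bonus if t == sponsored else 0.0))


def doctor_choice(benefit, providers):
    """Collect one recommendation per (sponsored, bonus) provider, then pick the
    recommendation the doctor judges best for the patient."""
    recommendations = [provider_recommendation(benefit, s, b) for s, b in providers]
    return max(recommendations, key=benefit.get), recommendations


# Hypothetical benefit scores, as the doctor would judge them.
benefit = {"A": 0.80, "B": 0.70, "C": 0.60}

single, _ = doctor_choice(benefit, [("B", 0.2)])
several, recs = doctor_choice(benefit, [("B", 0.2), ("C", 0.3), ("A", 0.05)])
print(single)         # B
print(several, recs)  # A ['B', 'C', 'A']
```

With a single provider, its bias passes straight through to the doctor’s decision. With several providers biased toward different products, the doctor’s selection recovers the best treatment. If every provider were biased toward the same product, the toy competition would not help, which is why the assumption about differing biases matters.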

Roth concluded with reflections on true probabilities, the actual likelihoods of outcomes in the world. Although exact probabilities would be valuable, he argued that it is often enough for predictions to be unbiased with respect to specific conditions, such as the events that matter for a given decision. Used this way, data can help AI systems and healthcare professionals reach reliable decisions about treatments and diagnoses together.
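One simple way to check whether predictions are unbiased under specific conditions is to average the prediction error separately over each condition of interest. The sketch below is an illustrative diagnostic, not Roth’s method; the patient data, the `events` predicates, and the helper name `conditional_bias` are all hypothetical.

```python
def conditional_bias(predictions, outcomes, contexts, events):
    """For each named event (a predicate on a case's context), return the mean of
    outcome - prediction over the cases where the event holds (None if no cases).
    A value near 0 means the predictions are unbiased on that event."""
    report = {}
    for name, holds in events.items():
        residuals = [y - p for p, y, x in zip(predictions, outcomes, contexts) if holds(x)]
        report[name] = sum(residuals) / len(residuals) if residuals else None
    return report


# Hypothetical data: four patients, a constant 50% risk prediction, and outcomes
# (1 = adverse event) that actually depend on age.
contexts = [{"age": 70}, {"age": 72}, {"age": 40}, {"age": 45}]
predictions = [0.5, 0.5, 0.5, 0.5]
outcomes = [1, 1, 0, 0]
events = {
    "all": lambda x: True,
    "age >= 65": lambda x: x["age"] >= 65,
    "age < 65": lambda x: x["age"] < 65,
}

print(conditional_bias(predictions, outcomes, contexts, events))
# prints: {'all': 0.0, 'age >= 65': 0.5, 'age < 65': -0.5}
```

The constant prediction is unbiased on average across all patients but badly biased within each age group, so it would mislead any decision that depends on age. The choice of which events to check is what determines the decisions a set of predictions can be trusted for.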

Roth’s insights underscore the evolving relationship between human intuition and machine learning, highlighting the importance of developing frameworks that facilitate constructive collaboration in critical fields like healthcare.
