Lawsuit Claims Meta Prioritized Profits Over Child Safety

UPDATE: A bombshell lawsuit against Meta, the parent company of Instagram and Facebook, alleges the tech giant allowed sex-trafficking posts to thrive on its platforms, prioritizing profits over the safety of children. The filing, submitted in Oakland’s U.S. District Court, claims that Meta had a “17x” policy under which accounts could post content related to sexual solicitation or prostitution 16 times and were suspended only on the 17th violation.

The chilling allegations come as part of a broader case involving Meta, Google’s YouTube, Snap, and TikTok, filed by children, parents, and school districts, including those in California. Plaintiffs accuse these companies of intentionally addicting minors to their platforms while being aware of the significant harm they cause.

According to the court documents, Meta’s internal communications show that Instagram recommended nearly 2 million minors to adults seeking to sexually groom children in 2023. The filing further claims that more than 1 million potentially inappropriate accounts were recommended to teen users in a single day in 2022.

The lawsuit asserts that despite earning $62.4 billion in profit last year, Meta refused to allocate necessary resources to protect children. “Meta simply refused to invest resources in keeping kids safe,” the filing states. It also criticizes the company for misleading parents and educators about the dangers of its platforms.

In response to these allegations, a Meta spokesperson stated, “We strongly disagree with these allegations, which rely on cherry-picked quotes and misinformed opinions.” They emphasized the company’s efforts to protect teens, including the introduction of Teen Accounts with built-in protections.

However, the lawsuit paints a different picture. It claims that internal documents show Meta had only about 30% of the staffing needed to effectively review child exploitation content. It also alleges that even when the company’s AI systems flagged explicit material with 100% confidence, Meta did not automatically delete it because of concerns about “false positives.”

The filing also alleges that Meta delayed implementing privacy measures for minors out of concern that doing so would reduce user engagement. According to the plaintiffs, default privacy settings were not applied to all teen accounts until the end of 2022, which allowed countless interactions between adults and minors to occur unchecked.

The case is still in the evidence-gathering phase, but its implications could be far-reaching. Plaintiffs are seeking unspecified damages and a court order to halt the alleged harmful practices, and they want the companies to warn users and parents about the addictive nature of their products.

In previous statements, Meta’s CEO, Mark Zuckerberg, claimed to have increased resources for child safety. Yet, the lawsuit alleges internal messages contradict this, suggesting the company prioritized engagement metrics over user safety.

The impact of social media on children’s mental health is also under scrutiny, with the filing alleging that platforms like Instagram contribute to a “youth mental health crisis” in schools nationwide. Internal Meta research reportedly found that teens feel “hooked” despite the negative effects.

As this case unfolds, the focus remains on how tech giants manage user safety, particularly for vulnerable populations like children. The implications of these allegations could reshape how social media platforms operate and enforce safety measures for their youngest users.

This urgent situation continues to develop, and many are calling for immediate action from both lawmakers and tech companies to ensure the safety of children online. Stay tuned for further updates as this critical case progresses.

-

Science4 weeks ago

Science4 weeks agoUniversity of Hawaiʻi at Mānoa Joins $25.6M AI Initiative for Disaster Monitoring

-

Science2 months ago

Science2 months agoIROS 2025 to Showcase Cutting-Edge Robotics Innovations in China

-

Science2 weeks ago

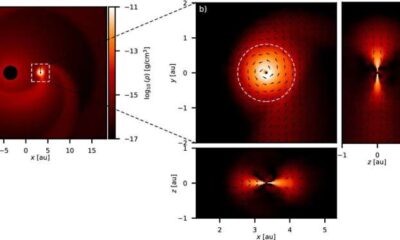

Science2 weeks agoALMA Discovers Companion Orbiting Red Giant Star π 1 Gruis

-

Lifestyle2 months ago

Lifestyle2 months agoStone Island’s Logo Worn by Extremists Sparks Brand Dilemma

-

Health2 months ago

Health2 months agoStartup Liberate Bio Secures $31 Million for Next-Gen Therapies

-

World2 months ago

World2 months agoBravo Company Veterans Honored with Bronze Medals After 56 Years

-

Lifestyle2 months ago

Lifestyle2 months agoMary Morgan Jackson Crowned Little Miss National Peanut Festival 2025

-

Politics2 months ago

Politics2 months agoJudge Considers Dismissal of Chelsea Housing Case Citing AI Flaws

-

Health2 months ago

Health2 months agoTop Hyaluronic Acid Serums for Radiant Skin in 2025

-

Science2 months ago

Science2 months agoArizona State University Transforms Programming Education Approach

-

Sports2 months ago

Sports2 months agoYamamoto’s Mastery Leads Dodgers to 5-1 Victory in NLCS Game 2

-

Sports2 months ago

Sports2 months agoMel Kiper Jr. Reveals Top 25 Prospects for 2026 NFL Draft